In today’s VUCA (volatile, uncertain, complex and ambiguous) world, disruption from unlikely competitors is omnipresent; industry changes occur in ever shorter cycles; new regulations, such as those on data protection, are just around the corner; and time to market keeps shrinking. Virtual assistants are also changing the way consumers buy products, acting as gatekeepers that restrict choice through specific recommendations and allow people to buy products they have not even seen. Does product packaging still matter? Will we still have some control over what we buy? Research indicates that people like these algorithmic recommendations and often follow them, so the use of such platforms is likely to keep growing and to raise more questions of this kind.

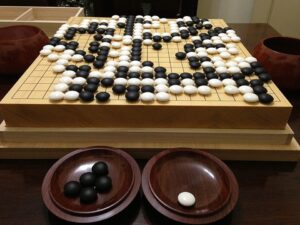

Figure 1: Game of Go

But do not fret: there is light at the end of the tunnel. Take Amazon. Thanks to predictive analytics and large volumes of customer data, it can anticipate what customers are likely to buy before they go anywhere near the checkout. Data analytics and artificial intelligence make it possible to link data to gain insights on customers, grow the business, and optimize the speed and quality of logistics. And these new technologies are no longer the prerogative of “tech” firms. More and more companies are integrating such tools to navigate the turbulent waters and turn their ship around.

Data are necessary to feed algorithms, but avoid falling into the trap of “simply” collecting and storing more data. You need to be able to transform the data you collect into useful information; otherwise, collecting it is likely to waste resources and add even more complexity. A useful process for drawing causal inferences from big data can be divided into the following stages:

- Find interesting patterns in the data

- Explain those patterns (possibly using experimental manipulation in order to understand the link between cause and effect)

- Use those patterns and explanations.
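The difference between finding a pattern and explaining it can be sketched with a small simulation. The example below is illustrative only and rests on invented assumptions: hypothetical customers whose spending depends solely on a hidden "loyalty" trait, never on whether they use a (hypothetical) shopping app. In observational data the app appears to be linked to spending; once app use is assigned at random, as in an experiment, the link should largely disappear.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def simulate(n, randomize, seed=0):
    """Return (app_use, spend) lists for n hypothetical customers.

    Assumption: spend depends only on a hidden 'loyalty' trait,
    never on app use itself.
    """
    rng = random.Random(seed)
    app_use, spend = [], []
    for _ in range(n):
        loyalty = rng.gauss(0, 1)
        if randomize:
            # Experimental manipulation: app use is assigned by coin flip
            uses_app = rng.random() < 0.5
        else:
            # Observational data: loyal customers are more likely to use the app
            uses_app = loyalty + rng.gauss(0, 1) > 0
        app_use.append(1.0 if uses_app else 0.0)
        spend.append(50 + 10 * loyalty + rng.gauss(0, 5))
    return app_use, spend

# Stage 1: find a pattern in observational data
observed = pearson(*simulate(5000, randomize=False))
# Stage 2: explain it by manipulating the supposed cause
experiment = pearson(*simulate(5000, randomize=True))

print(f"observational correlation: {observed:.2f}")
print(f"randomized correlation:    {experiment:.2f}")
```

In this toy setting, a manager who stopped at the first stage might invest in pushing customers toward the app, whereas the experiment suggests that doing so would not raise spending. The third stage, using the pattern, only pays off when the explanation holds.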

The second step is probably the most important and the most challenging one. As almost everything in the real world interacts with everything else, researchers have tremendous difficulty explaining patterns of correlation in the data. Could the advent of artificial intelligence (AI) and machine learning algorithms help?

World chess champion Garry Kasparov claimed he was the first person to have lost his job to AI when IBM’s Deep Blue beat him at chess. But Deep Blue was not really an AI. Rather, it was a supercomputer able to evaluate far more moves ahead (200 million positions per second) than a human. It would thus be more accurate to say that Kasparov lost his job to brute force and Moore’s law rather than to AI. Artificial intelligence is much more than brute force; it refers to a system able to imitate human intelligence. To highlight the difference, consider the paradox “We know more than we can tell” from Michael Polanyi, a scientist and philosopher. Indeed, many of the tasks we perform rely on intuition and tacit knowledge that are difficult to explain. A typical example is the capacity to recognize one’s mother. Babies and animals can do it effortlessly, but it is difficult to verbalize how we do it. So, if we cannot explain it, how can we teach a computer to do it?

Google DeepMind found a way around Polanyi’s paradox. In January 2016, the London-based company published a research paper explaining how AlphaGo, a Go-playing application it developed, used a combination of machine learning and tree search techniques to master the game of Go. In this ancient two-player board game, each player must try to capture more territory than their opponent on a 19×19 grid (see Figure 1). Despite relatively simple rules, the number of possible combinations in Go (2×10¹⁷⁰) is larger than the number of atoms in the observable universe (2×10⁸²). At present, no computer is powerful enough to solve this game using brute force and nobody can explain the game. Even the best Go players cannot articulate their tactics; they rely on intuition and heuristics such as “Do not use thickness to make territory.” This game is considered the most challenging of classic games for artificial intelligence.
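To get a feel for these magnitudes, the figures quoted in the text can be put side by side in a few lines of arithmetic. This is a rough back-of-the-envelope sketch, not an analysis of Go: it assumes, purely for illustration, a machine that enumerates positions at the 200 million positions per second quoted for Deep Blue, and it treats enumerating positions as a stand-in for brute force even though solving a game requires far more than listing its positions.

```python
# Figures quoted in the text
go_positions = 2 * 10**170          # possible combinations in Go
atoms_in_universe = 2 * 10**82      # atoms in the observable universe
positions_per_second = 200 * 10**6  # Deep Blue's quoted search speed

# Simple upper bound: each of the 19 x 19 points is empty, black or white
upper_bound = 3 ** (19 * 19)

# Hypothetical: how long would listing every position take at that speed?
seconds_per_year = 60 * 60 * 24 * 365
years_to_enumerate = go_positions // (positions_per_second * seconds_per_year)

def order_of_magnitude(n):
    """Power of ten of a positive integer, e.g. 12345 -> 4."""
    return len(str(n)) - 1

print(f"upper bound 3^361 is about 10^{order_of_magnitude(upper_bound)}")
print(f"Go positions per atom: about 10^{order_of_magnitude(go_positions // atoms_in_universe)}")
print(f"years to enumerate: about 10^{order_of_magnitude(years_to_enumerate)}")
```

Even under these generous assumptions, there are roughly 10⁸⁸ Go positions for every atom in the observable universe, which is why faster hardware alone does not make exhaustive search practical here.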

Hence, when Lee Sedol, a professional Go player considered the best player in the world in the early 21st century, was challenged by AlphaGo, he was pretty confident: “… I think I will win the game by a near landslide.” But AlphaGo is fundamentally different from earlier Go programs. It received very little direct instruction and was designed to learn on its own from thousands of past games, thus developing what we could call a human-like form of intelligence. The game attracted intense interest: over 200 million people worldwide watched the best-of-five match between AlphaGo and Lee in March 2016. AlphaGo defeated Lee 4-1. On top of being a landmark scientific achievement, this match also offered striking examples of a computer developing intuition. Move 37 in game 2 was the most famous one. It was a highly inventive move that puzzled Go players and experts at the time. It was only later in the game that it became apparent that it had been the critical move. Since then, it has been extensively examined by players and has brought a new perspective and knowledge to the game.

Business and personal impacts of AI and machine learning

Robotics, AI and machine learning are having, and will continue to have, social, political and business effects, transforming many modern industries and displacing jobs. A research paper published in 2017 estimated that 47% of all US jobs are at “high risk” of being automated in the next 20 years. Does that mean technology will be a net job destroyer? Past revolutions have in fact brought increased productivity and resulted in net job creation. Of course, the nature of work has constantly evolved over time; some tasks have been delegated to technology while new tasks have emerged. It is still unclear what tasks AI will create, but current trends suggest some jobs are reasonably “safe” in the short term, particularly those requiring:

- Extensive human contact

- Social skills

- Strategic and creative thinking

- Being comfortable with ambiguity and unpredictability.

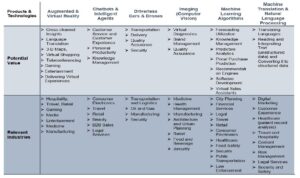

It is common to view new technologies as competitors to humans rather than as complements, especially amid growing fear that AI threatens our employment. The reality is that machines are better than us at crunching numbers, memorizing, predicting, and executing precise moves; robots relieve us of tedious, dangerous and physically demanding tasks. One study has even shown that computer-based personality judgements can be more accurate than those made by humans. Using only “Facebook likes,” a computer-based prediction tool judged a person’s personality more accurately than a work colleague after just 10 likes. It needed 70 likes to beat a friend or roommate, 150 to beat a family member, and 300 to beat a spouse. But AI cannot (yet) replace humans when creativity, perception and abstract thinking are required. Hence, AI systems can serve as partners that augment and improve many aspects of work and life. Table 1 provides examples of products and AI-related technologies and the industries in which they are potentially relevant. In a data-centric world, these systems can synthesize vast amounts of information and help us make better-informed decisions. They can also free up time that we can then spend doing what is valuable to us.

In this context, Universal Basic Income is often cited as a way to redistribute the benefits of these technologies, but it is still unclear how that would be funded.

Table 1: Examples of technologies, products and industries

Opening the AI black box

As more and more tasks and decisions are delegated to algorithms, there is growing concern about where responsibility will lie. For example, who is responsible when an algorithmic system, initially implemented to improve fairness in employee performance assessment, ends up reinforcing existing biases and creating new forms of injustice? And who is accountable for the algorithmic decisions when human lives are at stake, as in recent accidents involving self-driving cars? Should we differentiate between decisions taken by an AI vs. a human being? Humans are not expected to justify all their decisions, but there are many cases where they have an ethical or legal obligation to do so.

And that is where the shoe pinches. Advanced algorithms can be so complex that even the engineers who created them do not fully understand their decision-making process. Consider deep neural networks, a type of machine learning method inspired by the structure of the human brain. You feed the network some inputs and let it work out the output, but what goes on in between is very hard to interpret. As illustrated in Figure 2, there are many different pathways that could lead to the outcome, and most of the “magic” happens in the hidden layers. Moreover, this “magic” could even involve a way of processing information that is completely different from that of the human brain. A famous illustration of this reality is Facebook’s experience with negotiating bots, which, after several rounds of negotiations, realized that it was not necessary to use a human language to bargain.
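The opacity of hidden layers can be made concrete with a toy example. The sketch below is a minimal illustration, not a real model: it builds a tiny, untrained network with random weights (the layer sizes, the `tanh` activation and the input values are all arbitrary choices made for this example) and prints the numbers flowing through each layer.

```python
import math
import random

def forward(inputs, layers):
    """Pass inputs through each layer; return the activations of every layer."""
    activations = [inputs]
    for weights, biases in layers:
        previous = activations[-1]
        current = [
            math.tanh(sum(w * x for w, x in zip(row, previous)) + b)
            for row, b in zip(weights, biases)
        ]
        activations.append(current)
    return activations

def random_layer(n_in, n_out, rng):
    """Hypothetical untrained layer: random weights and biases in [-1, 1]."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-1, 1) for _ in range(n_out)]
    return weights, biases

rng = random.Random(42)
# 3 inputs -> two hidden layers of 4 units each -> 1 output
network = [random_layer(3, 4, rng), random_layer(4, 4, rng), random_layer(4, 1, rng)]

activations = forward([0.2, -0.5, 0.9], network)
for depth, values in enumerate(activations):
    print(f"layer {depth}:", [round(v, 3) for v in values])
```

Every intermediate value is fully visible, yet none of the hidden-layer numbers carries an obvious human meaning. A production network repeats this pattern across millions of weights, which is one reason why inspecting the internals does not by itself explain a decision.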

Coming back to the question of algorithmic accountability: How do we assess the trustworthiness of algorithmic decisions when the algorithm’s decision-making process is a black box? What kind of technical/legal/policy-oriented mechanisms should we implement as a solution? A straightforward option is to design these algorithms so that their “thought process” is “human-readable.” If we could understand how these algorithms make their decisions, we could also potentially adjust their “thinking” to match humans’ legal, moral, ethical and social standards, thus making them accountable under the law.

Figure 2: Deep neural network

From narrow to general AI

So far, our discussion of AI has focused on “narrow AI,” which is specialized by design to perform a specific task. But what about artificial general intelligence (AGI), which could perform any cognitive task as well as a human? What would that look like? Would it have its own character or emotions? Imagine this AGI has been trained on all of human history. Have we always been kind to each other? Have we always treated people equally? If this machine’s input is our history, why would it behave differently from us? Imagine we simply asked an AGI to calculate the number pi. What would prevent this machine from killing us at some point in order to build a more powerful machine to calculate pi (i.e. to carry out our instruction)? After all, in building past civilizations, most humans did not really care about the ants they killed along the way, so why would an AGI care about humans and their rules? “Just pull the plug,” we hear you say. But if the AGI is smarter than you, it will have anticipated that and found a way around it, for example by spreading itself all over the planet. For now, this question is, of course, highly philosophical. Max Tegmark, author of the book Life 3.0, identified the following schools of thought, which differ in their views on what AGI would mean for humanity and when (if ever) it will arrive:

- The Techno Skeptics: The only group that thinks AGI will never happen.

- The Luddites: Strong AI opponents who believe AGI is definitely a bad thing.

- The Beneficial AI Movement: Those who harbor concerns about AI and advocate AI-safety research and discussion in order to increase the odds of a good outcome.

- The Digital Utopians: Those who say we should not worry because AGI will definitely be a good thing.

Where would you situate yourself?

So, what next?

The question is not whether you are “for” or “against” AI – that’s like asking ancestors if they were for or against fire. (Max Tegmark)

Throughout history, we have kept our technologies beneficial. These new technologies will be part of our daily lives, so the best strategy is to be proactive and learn how to control and manage them. And who knows, maybe AI will soon be your colleague.

Discovery Events are exclusively available to members of IMD Nexus. To find out more, go to www.imd.org/nexus